Bohrium + SciMaster AI Agent Competition

Making Creation Sustainable

This competition aims to advance research AI agent technology, encourage innovative applications, and showcase outstanding work. We welcome all individuals and teams interested in research AI agent development to participate!

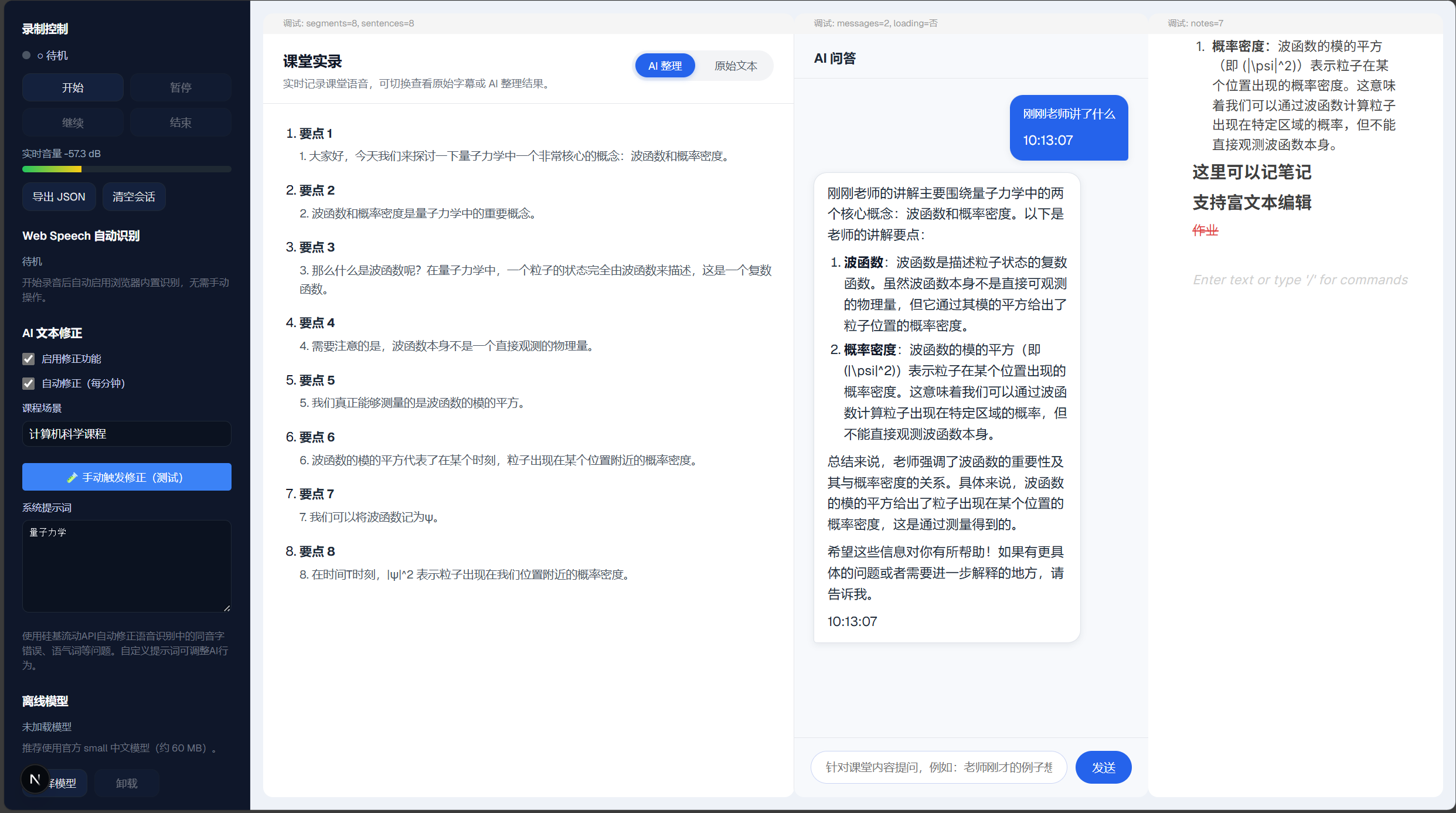

AI Agents Are Reshaping Research Paradigms

Society supports scientific research because exploration expands the boundaries of human knowledge and brings technological innovation that benefits humanity. AI agents are becoming the transformative engine of intelligent research.

Unlike traditional tools that assist with isolated tasks, research AI agents can drive and integrate literature reading, model computation, experiments, and other workflows, moving from "able to do" to "knowing how to do". Scientists will have cross-domain, cross-system AI research partners that help integrate knowledge and solve problems; researchers will be freed from repetitive work, and innovative ideas will be proposed, verified, and iterated faster.

Research AI agents may introduce new evaluation and incentive models for intellectual achievements. The basic unit of research output is gradually shifting from static papers to dynamic, executable agents, and these agents form a real-time value network by frequently invoking one another.

Cross-domain Collaboration

AI research partners help integrate knowledge and solve interdisciplinary challenges

Efficiency Boost

Researchers are freed from repetitive work, and innovative ideas are verified and iterated faster

Value Network

Agents invoke one another, forming a real-time value network

New Evaluation

From static papers to dynamic agents, evaluation becomes more real-time and objective

Two Tracks, Independent Evaluation

Choose the most suitable track based on your expertise and application scenarios

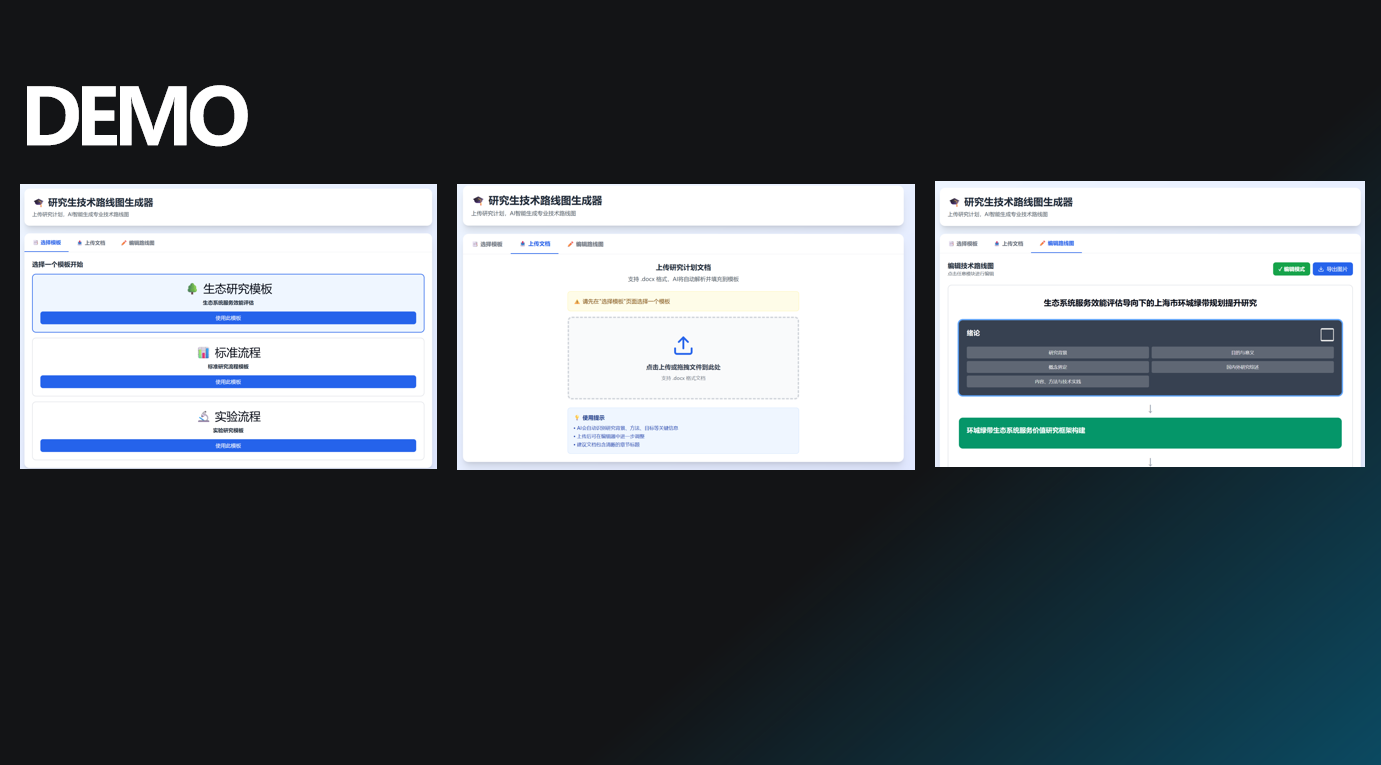

Deep Research Track

Domain-specific Applications

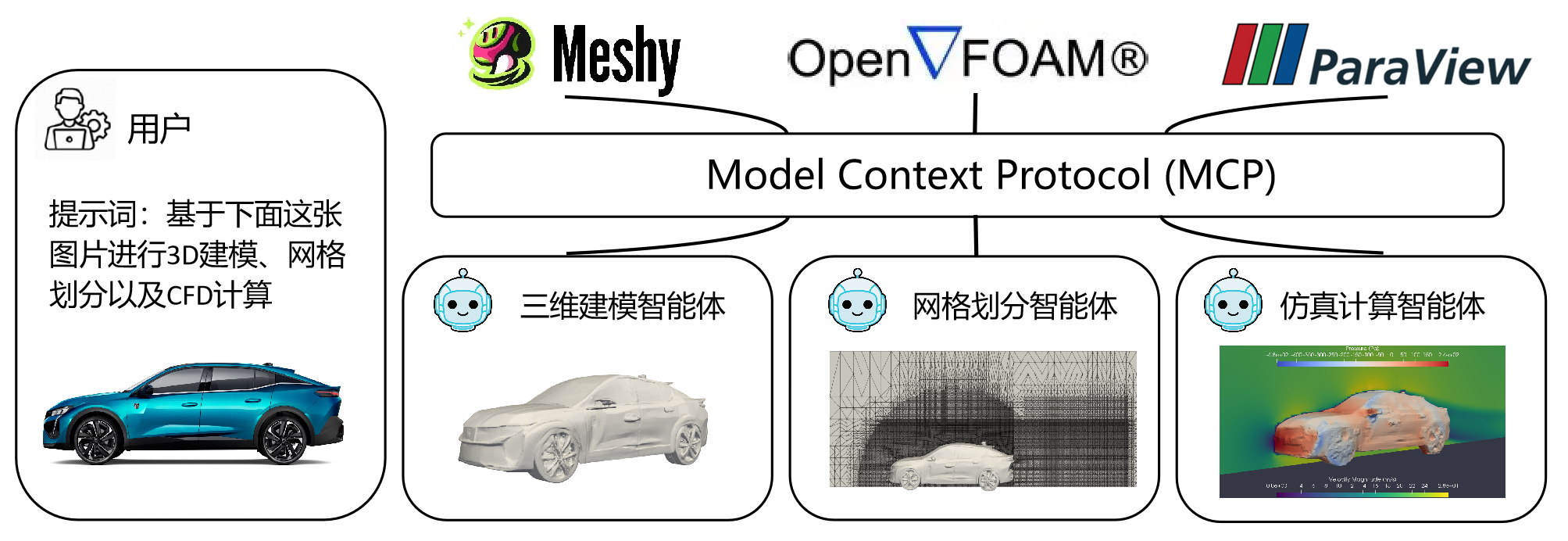

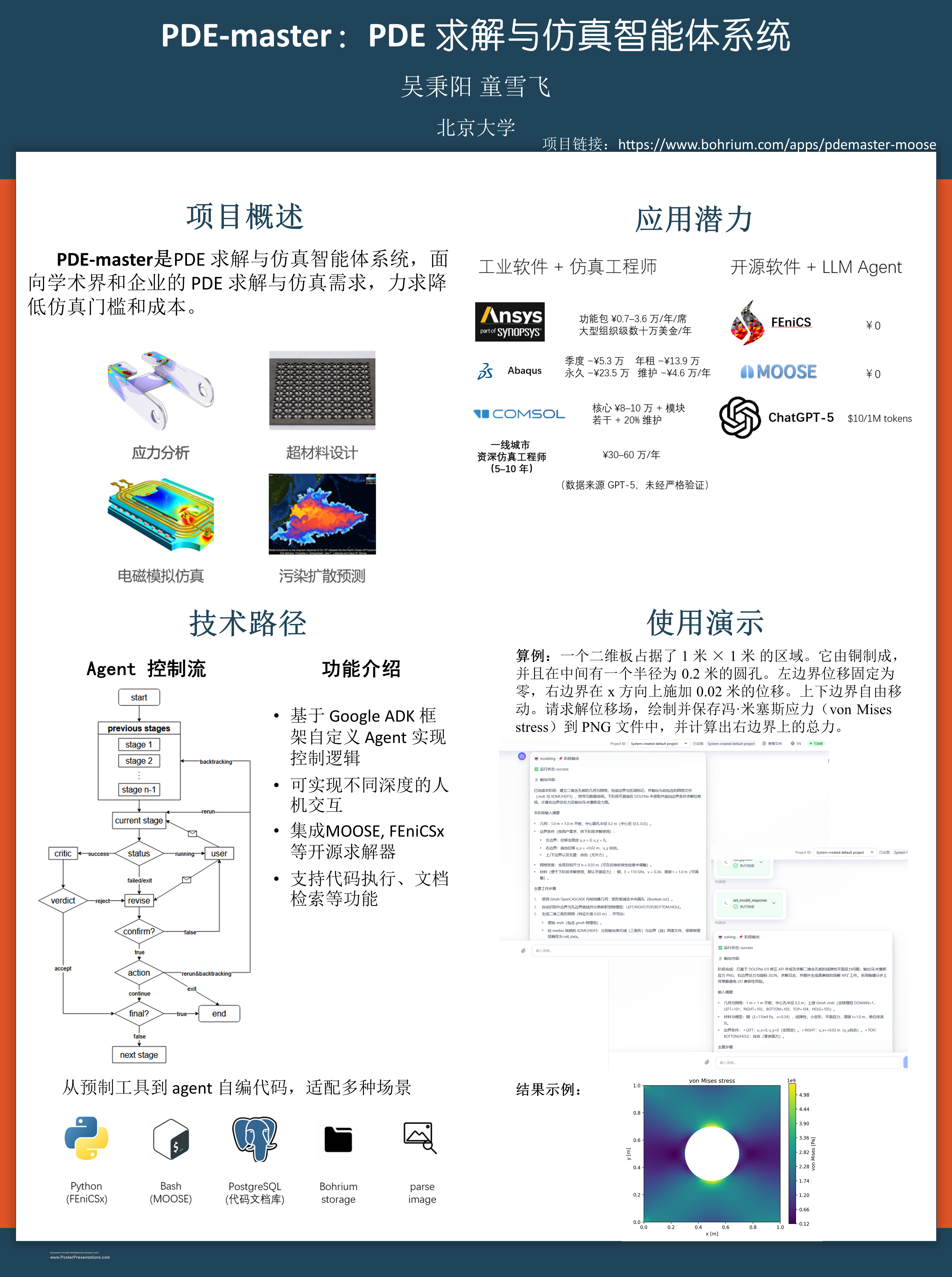

Focus on specific disciplines such as materials science, chemistry, biology, and simulation to solve professional research problems

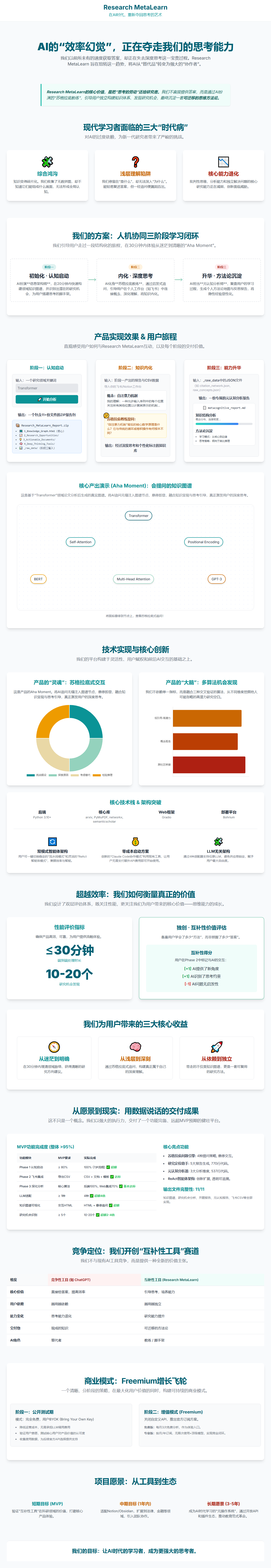

General Research Track

Cross-disciplinary Scenarios

Address general research scenarios across disciplines, including literature reading, data analysis, and experiment management

Total Prize Pool ¥1,000,000

Two independent tracks with generous prizes and continuous support for outstanding projects

Data-Driven + Expert Review

Unique dual evaluation system: real user feedback meets professional expert judgment

Platform Revenue Performance

Your work must not only tell a good story but also win users over. We evaluate the actual value of each submission through real platform data.

Professional Evaluation

A panel of experts from research and technology fields conducts professional evaluation from multiple dimensions.

The degree of innovation in technical approach, application scenarios, or solutions

Product maturity, stability, and user experience

Practicality and promotion potential in real research scenarios

Why This Evaluation Method?

We believe good research tools must not only be innovative but also genuinely help researchers solve problems. Weighting objective platform data at 50% ensures that submissions withstand real-world validation, while the 50% expert review ensures that technical depth and innovative value are recognized.

Open, Fair, Innovative

We welcome all individuals and teams interested in research agent development

Eligibility

Open to researchers and developers worldwide, with no restrictions on location or professional background

Originality

Submissions must be the participants' original work, free of plagiarism and of any infringement of intellectual property rights

Content Standards

Submissions must comply with relevant laws and regulations and must not contain any illegal or inappropriate content (such as violence, pornography, or discrimination)

Please refer to the official competition documentation for detailed rules, and contact the organizing committee if you have any questions.

Competition Timeline

From ideation to implementation, supporting teams throughout the journey

Launch

Phase 1: Creative design, development, and online submission. Entries should demonstrate the core value of research AI agents.

Trials

Conducted as an online defense, in which 25 teams are selected from 100+ entries to advance to the finals.

Finals

The top 30 teams compete. The two tracks are evaluated independently across multiple dimensions, including innovation, completeness, and practical value.

Award Ceremony

Final rankings will be announced and prizes awarded at the Beijing Science Intelligence Summit.

Making Creation Sustainable

Competitions may have winners and losers, but good ideas should not be abandoned due to time constraints. Through this competition, we hope to incubate, grow, and sustain more excellent research AI agent projects.

Promote the transformation of research evaluation systems, making the value of intellectual achievements more real-time, objective, and accurate

¥200,000 incentive program fund, giving outstanding projects a second chance and supporting their long-term development